Over the last few months, we’ve talked with many startups looking for tactics they can use to try out new ideas quickly. These aren’t strictly Minimum Viable Products, but rather features they think might work—because of a hunch, anecdotal user feedback, a pattern in their data, or a competitor’s feature set.

But what’s the right way to confirm or deny this hypothesis? Here are a few tactics you can try out to test whether a feature has legs, without investing a lot of time and effort into development or gold-plating.

All strategies for testing have to overcome a few fundamental challenges:

- You can’t handle the truth. Everyone wants to say nice things, so make it as hard as possible for them to lie to you.

- You don’t want to get quantitative too soon. You might be tempted to start crunching numbers right away, but do that and you’ll optimize for a local maximum instead of finding something adjacent that’s more powerful and useful. So find a way to collect qualitative exceptions.

- The test needs to be as close as possible to the finished product or feature you intend to launch. So figure out how to make it real.

Survey

Perhaps the most common way to collect data, surveys also have some of the lowest response rates, and they’re the farthest from the truth. But done right, surveys tell you several things:

- Which of several taglines/subject lines work best.

- The data in the survey itself.

- Willingness to follow up.

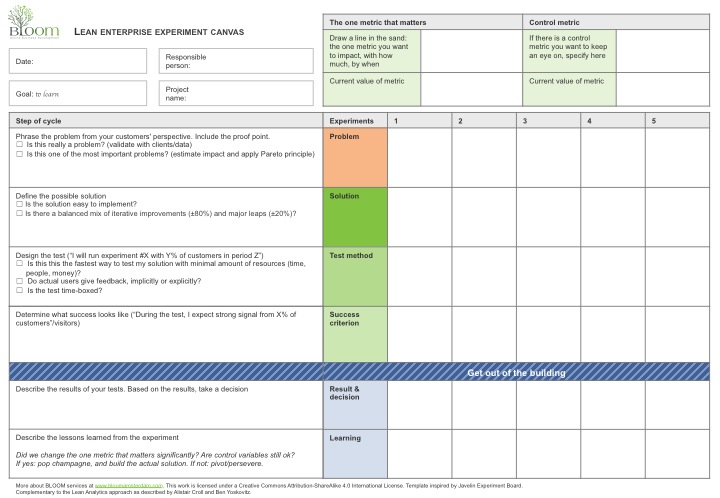

Since survey response rates drop dramatically as the expected effort rises, we’ve had the best response rates with a single question embedded in the mail itself. Google Forms lets you do this easily.

Recently I surveyed no-show attendees for an online event I ran. We had a 33% (197/600) response rate—which is really good—and some great insights into why no-shows didn’t attend. Here’s what I did:

First, I created a form in Google Docs. I made sure it was simple and short—a single question—with one exception: if respondents said they registered but couldn’t make it, I apologized profusely for asking them a second question, and then dug in for more detail.

Second, I used Google’s form interface to send the mail to the attendees. Google automatically adds the “flag this as spam” stuff, so a single mail of this kind complies with most spam laws.

Third, I tweaked the subject line so it said, “Can you answer this one question about the event on January 7?” This set the expectation that there wasn’t a lot of work involved. The mail itself contained the embedded form, with the single question—embedding is key.

Within 24 hours, nearly 200 people had told me why they couldn’t attend.

Note that if this were a startup, rather than an audience that had registered, I would have asked whether it was OK for me to follow up with them through another channel to collect deeper insights. But the key points here are:

- A short, simple question embedded in the mail.

- The right subject line.

- Expectation that there won’t be work involved.
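If you do test several subject lines, as the first bullet in the list of things surveys tell you suggests, the comparison comes down to a response rate per variant. Here’s a minimal Python sketch, assuming you log how many mails went out and how many answers came back for each subject line; `SubjectLineResult` and `rank_subject_lines` are hypothetical names, not part of Google Forms. The only real numbers below are the 197 responses out of 600 from the no-show survey.

```python
from dataclasses import dataclass


@dataclass
class SubjectLineResult:
    """Sends and responses for one subject-line variant (hypothetical record)."""
    subject: str
    sent: int
    responded: int

    @property
    def response_rate(self) -> float:
        # Avoid dividing by zero for a variant that was never sent.
        return self.responded / self.sent if self.sent else 0.0


def rank_subject_lines(results: list[SubjectLineResult]) -> list[SubjectLineResult]:
    """Order variants from highest to lowest response rate."""
    return sorted(results, key=lambda r: r.response_rate, reverse=True)


if __name__ == "__main__":
    # The single subject line from the no-show survey: 197 answers from 600 mails.
    no_show = SubjectLineResult(
        "Can you answer this one question about the event on January 7?", 600, 197
    )
    print(f"{no_show.response_rate:.1%}")  # prints 32.8%
```

With small sends, a gap of a few points between variants may just be noise, so treat the ranking as a hint rather than a verdict.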

Phone a friend

If you’ve read Lean Analytics, you know people lie, particularly when they want to make you feel better. One slightly sideways tactic that can work is to ask an interview subject to phone a friend, explain the product to them, and ask for their feedback, while you listen in.

This gets them out of their comfort zone. A friend of a friend doesn’t owe you anything, so there’s more honesty; plus, their desire to placate you is trumped (or at least mitigated) by their desire to not annoy their friend.

You’ll discover:

- Whether they are reluctant to tell others about it (an important fact, and a suggestion that the product, service, or feature might not be as viral as you’d hoped).

- Whether they can explain it properly (which tells you if your value proposition is easy to understand, remember, and convey).

- Whether their friend immediately understands the value, and what questions they want answered (which shows if you’ve nailed a simple, ubiquitous problem).

- Whether their friend wants to gain access to the thing once they know about it (a leading indicator of demand or cycle time).

You might also get a new lead. This is a particularly useful, albeit somewhat subversive, tactic for B2B companies hoping for referrals and endorsements.

Headcams and stop-motion cameras

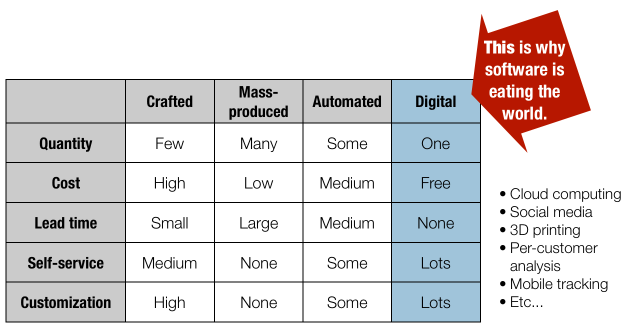

There’s a huge rise in blended physical/virtual startups, and this is only going to grow as small-order supply chains, 3D prototyping, and the Internet of Things become commonplace. If your product or service is used in a specific way—for example, in a retail environment—or includes a custom physical component, there’s no substitute for real-world testing.

Let’s say you have a startup that expects someone to scan the barcodes on everything they eat, and then suggests a meal plan they might like. Even before you’ve written a line of code, you have a big assumption: people will do what you hope. The process might be unwieldy, or easy to forget.

Fortunately, you can instrument this. Small, long-duration cameras like a GoPro, as well as stop-motion cameras like the Brinno TLC 200 Pro (I own both), can give you an extra perspective on how your idea functions in the real world. The videos are easy to analyze after the fact, and small enough for someone to explain and share. In the example above, you might put a GoPro on someone’s lapel in wide-angle mode and ask them to go grocery shopping. Then review the footage with them later and ask why they did what they did.

Watch someone use a competitor’s app

Thinking of building a feature because a competitor offers it? You’d be daft not to get people to show you how they use it. Set up Skype or some other screen-sharing tool and have them use the feature you’re considering. Watch where they get stuck, and do that better. Watch which things they don’t use, and push them farther down the roadmap.

If you have the patience to do this with ten people using a tool like Screenflow, you can capture their keystrokes and mouse clicks. Then build a list of these clicks and keys, and find the most common path through the competing application. Build just that.
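Here’s one way to turn those recordings into a “most common path.” It’s a minimal Python sketch, assuming you’ve transcribed each session by hand into a list of action labels; the labels and the `most_common_path` helper are hypothetical, and nothing here reads Screenflow files directly. With only ten sessions, exact full paths rarely match, so this version greedily follows the most frequent next action instead of counting identical sequences.

```python
from collections import Counter, defaultdict

END = "<end>"  # sentinel marking the end of a session


def most_common_path(sessions: list[list[str]], max_len: int = 50) -> list[str]:
    """Follow the most frequent next action, starting from the most frequent first action.

    Ties go to whichever action was seen first (Counter.most_common keeps that order).
    """
    firsts = Counter(s[0] for s in sessions if s)
    if not firsts:
        return []
    # Count every observed transition, including "last action -> END".
    transitions: dict[str, Counter] = defaultdict(Counter)
    for s in sessions:
        for current, nxt in zip(s, s[1:] + [END]):
            transitions[current][nxt] += 1

    path = [firsts.most_common(1)[0][0]]
    visited = {path[0]}
    while len(path) < max_len:
        # Skip actions already on the path so the walk can't loop forever.
        options = [a for a, _ in transitions[path[-1]].most_common() if a not in visited]
        if not options or options[0] == END:
            break
        path.append(options[0])
        visited.add(options[0])
    return path


if __name__ == "__main__":
    sessions = [
        ["open", "search", "filter", "export"],
        ["open", "search", "export"],
        ["open", "search", "filter", "export"],
        ["open", "settings", "search", "filter", "export"],
    ]
    print(most_common_path(sessions))  # ['open', 'search', 'filter', 'export']
```

The `visited` set keeps the walk from looping; the price is that a path which legitimately returns to an earlier screen may stop early or take a less common branch. With only ten sessions, it’s worth reading the raw sequences as well.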

If you combine this approach with the one below—the Button to Nowhere—you’ve built a perfect Minimum Viable Feature with sufficient capture of exceptions to be sure you haven’t missed an obvious, adjacent capability.

Button to Nowhere

Let’s say you’re thinking of adding a reports tool with which users can generate some basic reporting data. You don’t know whether the reports will be popular, whether anyone will even notice them, or which reports will be generated most often out of the box.

Try this:

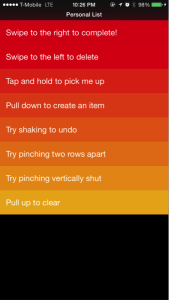

- Create the button. Note that this is where you can test the button name (“Reports”, “Trends”, “History”, etc.), its location, and its color, then use regression analysis to see which gets the most clicks.

- When someone clicks the button, send them to a page with a survey form. Say, “we’re nearly finished this report feature; in the meantime, can you describe what you were hoping to find when you clicked the button?”

- Collect the feedback and the user’s identity.

- When you’ve got enough data to know whether the feature is worth building—and how users hope to use it—use the feedback to build the “default” reports that are displayed on the page.

- This is the icing on the cake: Contact each customer who suggested one of the reports you’ve now made a default. Tell them the feature is there because they said so. Never mind that others did, too; they’ll feel like a personal contributor to your product.
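The bookkeeping behind these steps is small. Below is a minimal Python sketch, assuming an in-memory store, a stable user ID, and that you hand-code each free-text answer into a report category after reading it; `FakeDoorLog` and its methods are hypothetical names. It only compares raw click-through rates across button variants, a simpler stand-in for the regression analysis mentioned in the first step.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass, field


@dataclass
class FakeDoorLog:
    """In-memory record of one 'Button to Nowhere' test (hypothetical helper)."""
    impressions: Counter = field(default_factory=Counter)  # button variant -> times shown
    clicks: Counter = field(default_factory=Counter)       # button variant -> clicks
    requests: defaultdict = field(default_factory=lambda: defaultdict(set))  # category -> user IDs

    def show(self, variant: str) -> None:
        self.impressions[variant] += 1

    def click(self, variant: str) -> None:
        self.clicks[variant] += 1

    def feedback(self, user_id: str, category: str) -> None:
        # `category` is a label you assign after reading the user's free-text answer.
        # Storing IDs in a set means one user asking twice still counts once.
        self.requests[category].add(user_id)

    def click_through(self) -> dict[str, float]:
        """Clicks divided by impressions, per button name/location/color variant."""
        return {v: self.clicks[v] / shown for v, shown in self.impressions.items() if shown}

    def default_reports(self, top_n: int = 3) -> list[str]:
        """The most-requested report categories, to ship as the page's defaults."""
        ranked = sorted(self.requests, key=lambda c: len(self.requests[c]), reverse=True)
        return ranked[:top_n]

    def users_to_thank(self, top_n: int = 3) -> dict[str, list[str]]:
        """Everyone who asked for a report that made the default list."""
        return {c: sorted(self.requests[c]) for c in self.default_reports(top_n)}
```

In a real app, `show` and `click` would be called wherever the button renders and from its click handler, and `feedback` from the survey page it leads to; `users_to_thank` gives you the list for the last step.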

This is one of my favorite approaches for getting features right. Done well, you’ll build the right things first, create a good list of default settings, and make your users feel like unique and special snowflakes.

Signup form

The most basic test for a product is a signup form, a la Launchrock. Ben and I did this before the book came out; our mailing list now numbers over 6,000 people. It should be the first thing you do once you can clearly describe the value you’re going to offer people.

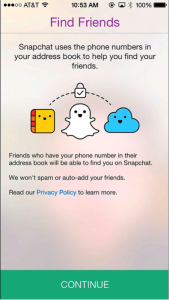

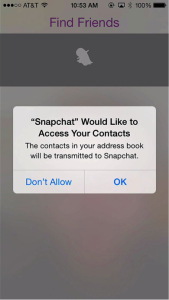

But signup forms aren’t just for whole products. A new feature—particularly one that’s in demand—is another chance to grow your list of contacts with whom you have permission to communicate. Describe the function, feature, or benefit and ask people if they want to discuss it.

Once you have a list of interested users, you might create a mailing list, or a shared platform to discuss the features. There are some commercial tools like Spigit that organize user feedback; there are others like Pligg that are more open, like a private version of reddit. This kind of open feedback platform works better for B2B organizations where the ratio of good content to spam will be relatively high—for a B2C feature, you might spend all your time moderating comments.

People often forget that the signup form is also the first chance you have to segment your users. They want in; ask them some questions that you think will help you to better target products, features, and pricing.
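A sketch of what that segmentation might look like once the answers come in, in Python. The questions (company size, role) and the `Signup`, `segment_counts`, and `cross_tab` names are hypothetical placeholders for whatever you actually ask.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Signup:
    """One signup plus answers to segmenting questions (hypothetical fields)."""
    email: str
    company_size: str  # e.g. "1-10", "11-100", "100+"
    role: str          # e.g. "founder", "marketer", "engineer"


def _unique(signups: list[Signup]) -> list[Signup]:
    # Keep one record per email address (case-insensitive); later entries win.
    return list({s.email.lower(): s for s in signups}.values())


def segment_counts(signups: list[Signup], question: str) -> Counter:
    """How many distinct signups gave each answer to one question."""
    return Counter(getattr(s, question) for s in _unique(signups))


def cross_tab(signups: list[Signup], row: str, col: str) -> Counter:
    """Counts for each (row answer, column answer) pair, e.g. size x role."""
    return Counter((getattr(s, row), getattr(s, col)) for s in _unique(signups))
```

A cross-tab like `cross_tab(signups, "company_size", "role")` is often where pricing hints show up, for instance if most interest comes from one size band.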

Prototype

You can get good feedback from users with a prototype. Sometimes a simple wireframe like those from Balsamiq is enough; in some cases you can get away with screenshots in a PowerPoint, Keynote, or Acrobat slide deck. Some folks like paper prototypes, too.

The challenge with any of these is twofold. First, it’s artificial, so you aren’t getting an accurate view of how people will engage with the feature, product, or service. And second, it’s hard to quantify and analyze how well the user is interacting.

One way to generate quantitative analytics from prototypes is to give the user a set of vague goals (“invite a friend,” for example, or “create a profile”) and then record:

- Could they accomplish the goal?

- How long did it take?

- How many corrections or “back” presses did they make?

- In what steps did they stall?

Don’t just build prototypes. Treat your key goals like conversion funnels as you watch test subjects go through them.
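Those four questions, plus a funnel view of each goal, can be tallied from a simple per-attempt record. This is a minimal Python sketch, assuming one record per participant per goal and that you note by hand the furthest step each person reached; `TaskAttempt`, `summarize`, and the step names are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass
from statistics import median


@dataclass
class TaskAttempt:
    """One participant's attempt at one vague goal, e.g. "invite a friend" (hypothetical record)."""
    participant: str
    completed: bool
    seconds: float
    back_presses: int
    furthest_step: int  # index into the goal's list of steps


def summarize(attempts: list[TaskAttempt], steps: list[str]) -> dict:
    """Answer the four prototype questions for one goal, plus a step-by-step funnel."""
    n = len(attempts)
    done = [a for a in attempts if a.completed]
    # Funnel: participants who got at least as far as each step.
    funnel = {step: sum(1 for a in attempts if a.furthest_step >= i) for i, step in enumerate(steps)}
    # Where did the people who gave up stop?
    stalls = Counter(steps[a.furthest_step] for a in attempts if not a.completed)
    return {
        "completion_rate": len(done) / n if n else 0.0,
        "median_seconds_completed": median(a.seconds for a in done) if done else None,
        "mean_back_presses": sum(a.back_presses for a in attempts) / n if n else 0.0,
        "funnel": funnel,
        "most_common_stall": stalls.most_common(1)[0][0] if stalls else None,
    }
```

Reporting the median time for completers only keeps one participant who wandered off mid-task from skewing the result, while the funnel shows at which step people drop away.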

What would Bob do?

I’ve tried this many times at conferences, and the results are nearly always the same. First, I ask “how many of you smoke pot, by a show of hands?” Few people raise their arms. Then I say, “how many of you think that at least 50% of Americans smoke pot?” I get many more hands the second time.

Turns out that we all think we’re normal, so we assume others are like us (technically, the False Consensus Effect). Asking your subject how they think someone else would feel is often a better way to get an answer that’s closer to their own true answer.

False payment

We’ve said before that people lie. So you need to make it painful for them to do so. One way to accomplish this is to ask them to get out their wallet.

If you have a new feature, pop up a mockup of it when users log in, tell them it’s $30 a month more, and see how many click on it. If you’ve got some downloadable content you want to charge for, offer it up and see what happens.

Then don’t take their money.

This does two important things:

- It shows you whether people are really interested in a feature. You can charge later, but give it to them for free.

- It allows you to test pricing. If I charge $10 to one user and $100 to another, and they find out, bad things can happen. But if I ask one to pay $10 and another to pay $100, both agree, and I then give it to them both for free for a while, I’ve learned that I was grossly under-charging for my product.
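If you run the price test in software, two details matter: each user should always see the same price, and the “buy” click should record intent without charging anything. Here’s a minimal Python sketch under those assumptions; the price points, `assign_price`, and `FalsePaymentTest` are hypothetical, and hashing the user ID is just one way to keep the assignment stable.

```python
import hashlib
from collections import defaultdict

PRICE_POINTS = [10, 30, 100]  # hypothetical monthly prices (USD) to test


def assign_price(user_id: str, prices: list[int] = PRICE_POINTS) -> int:
    """Map a user to one price point deterministically, so they always see the same offer."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return prices[int.from_bytes(digest[:8], "big") % len(prices)]


class FalsePaymentTest:
    """Records who saw which price and who clicked 'buy'. Never charges anyone."""

    def __init__(self) -> None:
        self.shown: dict[int, set[str]] = defaultdict(set)     # price -> users offered it
        self.accepted: dict[int, set[str]] = defaultdict(set)  # price -> users who clicked buy

    def show_offer(self, user_id: str) -> int:
        price = assign_price(user_id)
        self.shown[price].add(user_id)
        return price

    def record_accept(self, user_id: str) -> None:
        # Records intent only; nothing here takes payment.
        price = assign_price(user_id)
        if user_id in self.shown.get(price, set()):
            self.accepted[price].add(user_id)

    def acceptance_rates(self) -> dict[int, float]:
        """Share of users shown each price who said yes to it."""
        return {p: len(self.accepted[p]) / len(users) for p, users in sorted(self.shown.items())}
```

Hashing the ID instead of picking a price at random each visit means a returning user never sees the price jump, which removes one obvious way to notice the test; it does nothing about two users comparing notes.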

There’s one danger here: anchoring. Users will learn what the “right” price is when you ask them to pay it. To avoid this, make sure the final feature (the one for which you actually charge) includes enough extra functionality that they’ll re-evaluate their perception of the initial value.

So that’s our list. What did we miss?

Recently,
